An introduction to Learning Analytics *

Pierre-Julien Guay collaborates in the development of the ISO/IEC 20748 series of standards for learning analytics as a member of ISO/IEC JTC 1/SC 36, the committee tasked with developing international standards in the field of information technology for education, training and learning. Alexandre Enkerli explores issues surrounding the use of learning data in the Quebec collegiate environment.

Introduction

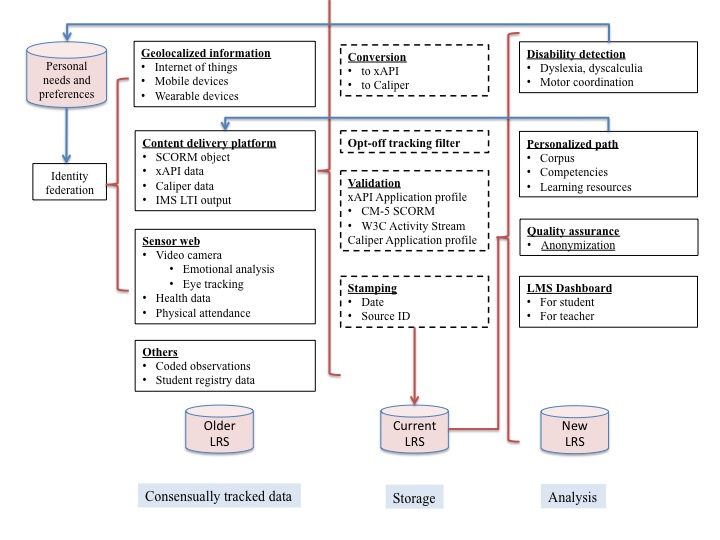

Many types of learning activities are currently being conducted using sophisticated digital tools. This technology is essential to the online courses that have come to dominate remote learning. It has also become an increasingly important part of in-class training and an essential part of informal learning and in-company training.

These educational materials are distributed via a broad range of platforms and media, including the Web (accessible by desktop computer as well as by smartphone), wearable technology (sensors, smart watches) and even the Internet of Things. It is now possible to harvest data from a significant portion of learning activities and analyze their progression.

This article first establishes the uses for these analyses, then examines the different types of data gathered and the pre-processing required to analyze them.

Applications of learning analytics

The most common use for data on learning is the construction of a dashboard that compares the learning pathway of a single learner with those of larger groups, which can vary in size from a single team to a class group or even an entire establishment. This type of tool is used in environments such as Moodle (the Learning Analytics Enriched Rubric module and SmartKlass™) and Brightspace, as well as in tools supplied by large American publishers, such as Pearson Learning Studio.

The learner’s dashboard charts their position using several indicators, including level of progression, test score histograms and the number of visits. One of its principal goals is to involve learners in managing and planning their own activities.
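As an illustration of how a dashboard might position one learner against a group, the sketch below builds a test score histogram and computes a percentile rank. It is not taken from any of the tools named above; the function names, the 0 to 100 score scale and the 10-point bin width are assumptions made for the example.

```python
from collections import Counter


def score_histogram(scores, bin_width=10):
    """Count scores per bin, keyed by the lower bound of each bin.

    Assumes scores on a 0-100 scale; a score of exactly 100 is
    counted in the top bin rather than in a bin of its own.
    """
    bins = Counter()
    for score in scores:
        lower = min(int(score // bin_width) * bin_width, 100 - bin_width)
        bins[lower] += 1
    return dict(sorted(bins.items()))


def percentile_rank(score, group_scores):
    """Percentage of the group at or below `score`, counting ties as half."""
    below = sum(1 for s in group_scores if s < score)
    equal = sum(1 for s in group_scores if s == score)
    return 100.0 * (below + 0.5 * equal) / len(group_scores)


# Illustrative values only, not real learner data.
class_scores = [55, 62, 68, 71, 85, 100]
histogram = score_histogram(class_scores)
rank = percentile_rank(71, class_scores)
```

The same two functions can be applied to a team, a class group or an entire establishment simply by changing the list of scores passed in.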

The instructor’s dashboard screens for learners who are having difficulty, based on pre-established criteria. This allows the instructor to act promptly and provide the help required. Some tools even claim to offer predictive analyses, since the frequency and duration of visits over the first three weeks of a course appear to be a reliable predictor of success or failure in that course.
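Screening against pre-established criteria can be sketched as follows. This is a minimal example, not the method used by any particular platform: the `Visit` record, the function name and the threshold values are all hypothetical, and a real course would set its own criteria.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    learner_id: str
    week: int        # course week, starting at 1
    minutes: float   # duration of the visit


# Hypothetical thresholds chosen for the example.
EARLY_WEEKS = 3
MIN_VISITS = 6
MIN_MINUTES = 90.0


def flag_learners(visits, enrolled):
    """Return the enrolled learners whose activity in the first weeks
    falls below either the visit-count or the total-duration threshold."""
    counts = {learner: 0 for learner in enrolled}
    minutes = {learner: 0.0 for learner in enrolled}
    for visit in visits:
        if visit.learner_id in counts and 1 <= visit.week <= EARLY_WEEKS:
            counts[visit.learner_id] += 1
            minutes[visit.learner_id] += visit.minutes
    return sorted(
        learner
        for learner in enrolled
        if counts[learner] < MIN_VISITS or minutes[learner] < MIN_MINUTES
    )


# Illustrative data: "a" visits regularly, "b" rarely, "c" never.
visits = [Visit("a", week, 20.0) for week in (1, 1, 2, 2, 3, 3)]
visits += [Visit("b", 1, 30.0), Visit("b", 3, 30.0), Visit("a", 5, 45.0)]
flagged = flag_learners(visits, ["a", "b", "c"])
```

A learner with no recorded visits is flagged as well, which is why the function takes the enrolment list rather than inferring learners from the visit log. The output is a prompt for the instructor to follow up, not a verdict.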

Another application consists of rapidly detecting learning difficulties in individual learners. Language problems (dysorthographia and dyslexia), arithmetic problems (dyscalculia) and even motor skills problems (dyspraxia) can skew learning achievements. Rapid detection and remediation can make a notable difference, especially when the intervention takes into account all the factors identified in the assessment.

In certain cases, instructors and administrators can even access a broader pool of data for all learners in a single organization. As a result, managers can assign employee-specific training, and instructors can compare notes with colleagues on the absentee rate of a specific learner in their courses.

Analyses of class groups can also be performed as a straightforward quality assurance measure. Because this is no longer an individual coaching effort, best practices should include dissociating personal information from learning pathways to preserve anonymity, ensure data confidentiality and protect the privacy [1] of all associated parties (including the relatives and associates of learners).
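One way to dissociate personal information from learning pathways is to replace the learner identifier with a keyed hash and drop directly identifying fields before analysis. The sketch below uses Python's standard `hmac` and `hashlib` modules; the record layout, field names and function name are assumptions for the example. Note that this is pseudonymization rather than full anonymization: anyone holding the secret key, or enough contextual data, may still be able to re-identify a learner.

```python
import hashlib
import hmac


def pseudonymize(record, secret_key, personal_fields=("name", "email")):
    """Return a copy of `record` without personal fields, with the
    learner id replaced by a stable, keyed pseudonymous token."""
    digest = hmac.new(
        secret_key, record["learner_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in personal_fields and key != "learner_id"
    }
    cleaned["learner_token"] = digest[:16]  # shortened for readability
    return cleaned


# Illustrative record and key; a real key would be stored securely.
key = b"example-secret-key"
record = {
    "learner_id": "s001",
    "name": "Learner X",
    "email": "x@example.org",
    "quiz_score": 82,
}
safe_record = pseudonymize(record, key)
```

Because the token is stable for a given key, the records of one learner can still be linked together for group-level analysis without exposing who that learner is.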

Some promoters of learning analytics focus on automated pathways for personalized learning based on personal preferences and the material’s level of difficulty. However, this function requires access to a precise and stable representation of the curriculum structure, a list of associated skills and a sizeable collection of digital resources with clearly defined parameters. For the time being, this information is rarely found in interoperable digital formats.

The results of these analyses should not be interpreted as a solid structure of precise facts or robust prescriptions. They are better understood as commentary or suggestions. Predictive patterns are based on a simplified model of learning and do not take into account the intrinsic complexity of individuals, their personal backgrounds or the greater context that surrounds learning.

Image: Data flow of learning analytics

Data collection

- Learner X has sent a message to Y

- Learner X has watched the 123.avi file in its entirety
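Activity records such as "Learner X has sent a message to Y" can be stored in a structured form built around who did what to which object. The sketch below is loosely modelled on the actor/verb/object pattern used by specifications such as xAPI, but it is not an implementation of any standard; the class, field names, verb labels and timestamps are invented for the example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ActivityStatement:
    actor: str               # who acted, ideally a pseudonymous identifier
    verb: str                # what they did
    target: str              # what the action was applied to
    timestamp: str           # ISO 8601 time of the event
    completed: bool = False  # whether the activity was finished


def to_json_line(statement):
    """Serialize one statement as a single JSON line for storage."""
    return json.dumps(asdict(statement), sort_keys=True)


# The two records from the text, with made-up timestamps.
statements = [
    ActivityStatement(
        "learner-x", "sent-message", "learner-y", "2024-01-15T09:30:00+00:00"
    ),
    ActivityStatement(
        "learner-x", "watched", "123.avi", "2024-01-15T10:05:00+00:00",
        completed=True,
    ),
]
log_lines = [to_json_line(s) for s in statements]
```

Writing one JSON object per line keeps collection simple and leaves aggregation to the later processing and storage stage.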

Data processing and storage

Success Factors

- An option to withhold consent to the gathering and use of learning pathway data, with automatic removal of that data

- The use of a mechanism such as identity federation for all online services (registrar, library) and the external services used as a part of learning (social networks, YouTube, Google Apps for Education, Office 365, Adobe Creative Cloud, etc.)

- Policy analysis for each external service provider (data hosting site, length of archiving, use)

- A statement on the type of data collected and the time frame for its archiving and use, including for research purposes

- A statement on the measures implemented to protect privacy

- A statement on compliance with laws on the protection of privacy and universal accessibility

[1] In colloquial usage, it is common to conflate these distinct concepts. A strategic business plan must be kept confidential even though it has nothing to do with anyone’s private life. Conversely, personal information related to private life can be provided without identifying the individuals from whom it was obtained.

* In collaboration with Alexandre Enkerli